Research Progress

Recently, a research group led by Prof. ZHU Yongxin from the Shanghai Advanced Research Institute of the Chinese Academy of Sciences unveiled Bit-Cigma, a revolutionary generic matrix multiplication accelerator hardware architecture that exploits bit-level sparsity, ensures zero-error accuracy, and remodels floating-point computation.

The results were published in February 2025 in IEEE Transactions on Computers, a top-tier journal in the field of computer architecture.

Matrix multiplication underpins artificial intelligence (AI) and scientific computing, driving processes such as neural network training and complex simulations. These fields demand efficient computing power capable of both floating-point (FP) and quantized integer (QINT) operations with exceptional performance and accuracy.

However, the development of efficient matrix multiplication accelerators faces two persistent challenges. First, the bit-level redundancy inherent in binary data representation wastes computational resources and limits efficiency. Second, floating-point exponent matching relies on slow, resource-heavy methods that bottleneck throughput and compromise accuracy.

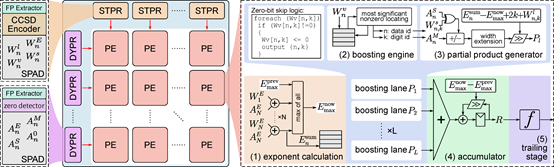

Bit-Cigma is a scalable, bit-level sparsity-aware architecture designed to handle various data types while delivering superior performance, accuracy, and efficiency for matrix multiplications across diverse tasks. The researchers proposed the Compact Canonical Signed Digit (CCSD) encoding technique, a streamlined on-chip method that slashes redundant computations by maximizing bit-level sparsity, all at half the cost of traditional approaches. For large matrices, the team devised a segmented approach that splits data into manageable blocks and aligns floating-point exponents dynamically. This ensures pinpoint accuracy and boosts processing speed and throughput without taxing hardware resources.
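
To give a feel for why signed-digit encoding increases bit-level sparsity, the following Python sketch implements the classic canonical signed digit (CSD) form, also known as the non-adjacent form. This is the textbook encoding, not the team's CCSD method, whose details are not described here; the function names `to_csd`, `from_csd`, and `csd_multiply` are hypothetical and chosen only for illustration.

```python
def to_csd(n: int) -> list[int]:
    """Encode an integer as canonical signed digits (non-adjacent form).

    Digits are in {-1, 0, +1}, least-significant digit first.
    Textbook CSD, shown as an illustration; this is NOT the CCSD
    encoding from the Bit-Cigma work.
    """
    digits = []
    while n != 0:
        if n & 1:
            # n mod 4 == 1 -> digit +1, n mod 4 == 3 -> digit -1
            d = 2 - (n & 3)
            n -= d
        else:
            d = 0
        digits.append(d)
        n >>= 1
    return digits


def from_csd(digits: list[int]) -> int:
    """Decode a least-significant-first signed-digit list back to an integer."""
    return sum(d * (1 << i) for i, d in enumerate(digits))


def csd_multiply(a: int, b: int) -> int:
    """Multiply a by b with one shift-and-add (or subtract) per nonzero digit of b."""
    total = 0
    for i, d in enumerate(to_csd(b)):
        if d:  # zero digits cost nothing, which is where sparsity pays off
            total += d * (a << i)
    return total


def nonzero_digits(digits: list[int]) -> int:
    """Count the digits that would trigger an add or subtract."""
    return sum(1 for d in digits if d != 0)


# 255 is 11111111 in binary (8 nonzero bits) but 256 - 1 in CSD (2 nonzero digits).
binary_ones = bin(255).count("1")
csd_ones = nonzero_digits(to_csd(255))
```

In this sketch, multiplying by 255 takes two add/subtract steps instead of eight, which is the kind of redundant work a sparsity-aware datapath can skip.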

Extensive experiments demonstrate that Bit-Cigma, utilizing CCSD, achieves a performance boost of 3 to 4 times and improves efficiency by over 10 times compared to state-of-the-art FP and QINT accelerators. Additionally, Bit-Cigma achieves zero computational error, a feat not matched by other accelerators.
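
As a rough illustration of how block-wise exponent alignment can avoid rounding error, the Python sketch below splits each floating-point value into an integer mantissa and an exponent, aligns every product in a block to the block's smallest exponent, and accumulates in integers. It is a minimal sketch under stated assumptions, not the Bit-Cigma datapath: the helper names (`split`, `block_dot`, `exact_dot`, `naive_dot`) are hypothetical, and Python's arbitrary-precision integers stand in for whatever accumulator width real hardware would use.

```python
import math
from fractions import Fraction


def split(x: float) -> tuple[int, int]:
    """Return (mantissa, exponent) with x == mantissa * 2**exponent exactly."""
    m, e = math.frexp(x)               # x = m * 2**e with 0.5 <= |m| < 1
    return int(m * (1 << 53)), e - 53  # scaling by 2**53 is exact for doubles


def block_dot(a_block, b_block) -> tuple[int, int]:
    """Exact dot product of one block, returned as (integer sum, shared exponent)."""
    terms = []
    for a, b in zip(a_block, b_block):
        ma, ea = split(a)
        mb, eb = split(b)
        terms.append((ma * mb, ea + eb))     # product of mantissas, sum of exponents
    e_min = min(e for _, e in terms)         # align every term to the smallest exponent
    acc = sum(m << (e - e_min) for m, e in terms)
    return acc, e_min


def exact_dot(a, b, block: int = 4) -> Fraction:
    """Process the vectors block by block and combine the exact partial sums."""
    total = Fraction(0)
    for i in range(0, len(a), block):
        acc, e = block_dot(a[i:i + block], b[i:i + block])
        total += Fraction(acc) * Fraction(2) ** e
    return total


def naive_dot(a, b) -> float:
    """Plain left-to-right floating-point accumulation, for comparison."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += x * y
    return acc


a = [1e16, 1.0, -1e16]
b = [1.0, 1.0, 1.0]
naive = naive_dot(a, b)           # 0.0: the 1.0 is lost when added to 1e16
exact = exact_dot(a, b, block=2)  # Fraction(1, 1): no rounding at any step
```

The comparison shows the general idea: once all terms share an exponent, accumulation becomes integer addition, so no information is discarded along the way.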

The Bit-Cigma architecture and the CCSD technique pave the way for more efficient, high-performance solutions for generic matrix multiplication. These advancements promise to support a range of applications and set the foundation for future hardware-centric high-performance systems.